Introduction

Everyone in software talks about CI/CD these days. The idea behind continuous integration and continuous delivery is to merge code often, run some tests, and ship updates quickly. Sounds simple, right? In reality, it’s a headache. Builds blow up for reasons you can’t predict, half the tests are flaky, pipelines take forever, and when a deployment goes wrong, you lose hours just trying to figure out what broke.

The truth is, CI/CD isn’t just about speed anymore. It’s about whether the whole thing stays reliable as the system grows. That’s where AI in CI/CD Pipelines is starting to help. It’s not automation for the sake of it. It means actually spotting patterns in old builds, predicting what’s likely to fail, and taking some of the pain out of debugging. Solutions like those developed at Payoda are applying AI-Driven DevOps Automation to make pipelines a little smarter instead of just faster.

Why CI/CD Still Feels Broken

Even with automation everywhere, the same old problems keep popping up:

- Tests that sometimes pass, sometimes fail, for no real reason → so you waste time re-running them

- Pipelines that crawl once the project grows big, because every extra stage adds more wait

- Builds blowing up over the smallest config slip or typo

- Deployments that feel like crossing your fingers; you only notice the real issue once it’s live

Yeah, automation helped us move faster, but it didn’t make the whole thing any smarter. That’s where AI in CI/CD Pipelines might actually change the story.

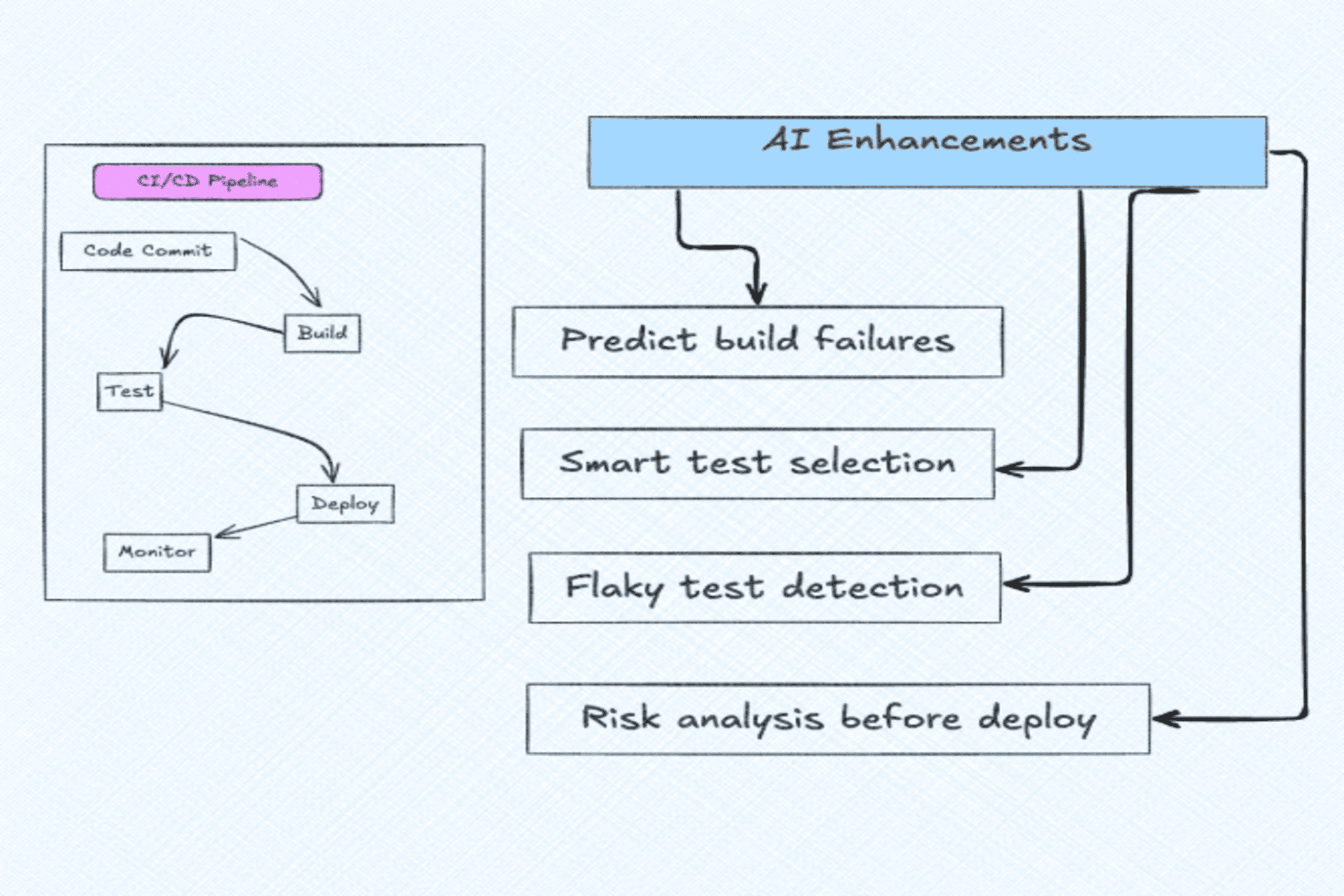

Where AI Fits Into CI/CD

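Think of AI as an extra layer on top of your existing automation. It doesn’t replace Jenkins, GitHub Actions, or GitLab CI. It works alongside them, learning from the build logs, test results, and deployment metrics they already produce.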

How AI Can Actually Help CI/CD

- Predict builds blowing up

Honestly, half the time you know a build’s gonna fail just by looking at what changed. AI-Driven DevOps Automation can actually check old logs and patterns and tell you upfront, “Hey, this one looks risky.” Better than wasting 30 mins on a doomed pipeline.
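
Here’s a minimal sketch of the idea in Python, assuming you can export past builds as (files changed, did it fail) pairs. The function names, the smoothing prior, and the sample data are all made up for illustration. Treating files as independent is a big simplification, and real tools would likely use richer signals. This version just scores a new change by how often builds touching the same files failed before.

```python
from collections import defaultdict


def file_failure_rates(history, prior=0.1, prior_weight=2.0):
    """Estimate how often builds that touched each file have failed.

    history: list of (changed_files, failed) pairs from past builds.
    The prior pulls rarely seen files toward a baseline rate, so one
    unlucky build doesn't mark a file as 100% risky.
    """
    builds = defaultdict(int)
    failures = defaultdict(int)
    for files, failed in history:
        for f in set(files):
            builds[f] += 1
            failures[f] += int(failed)
    return {
        f: (failures[f] + prior * prior_weight) / (builds[f] + prior_weight)
        for f in builds
    }


def build_risk(changed_files, rates, prior=0.1):
    """Chance that at least one changed file leads to a failure.

    Naively treats files as independent; unseen files get the prior.
    """
    p_all_ok = 1.0
    for f in set(changed_files):
        p_all_ok *= 1.0 - rates.get(f, prior)
    return 1.0 - p_all_ok


if __name__ == "__main__":
    # Made-up build history, for illustration only.
    history = [
        (["config.yaml", "app.py"], True),
        (["config.yaml"], True),
        (["app.py"], False),
        (["README.md"], False),
        (["README.md", "app.py"], False),
    ]
    rates = file_failure_rates(history)
    for change in (["config.yaml"], ["README.md"]):
        risk = build_risk(change, rates)
        print(change, f"risk={risk:.2f}", "looks risky" if risk > 0.5 else "ok")
```

Anything above your chosen threshold (0.5 here, an arbitrary pick) gets the “this one looks risky” warning before the pipeline even starts.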

- Run tests that actually matter

Nobody wants to sit around while thousands of tests crawl. If only the tests touched by your change ran… Well, that’s what AI can figure out. It cuts time while keeping the checks that actually matter for that change.
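
The simplest version of this isn’t even machine learning. It’s coverage-based test selection, roughly the baseline that ML-driven tools try to improve on by ranking tests by how likely they are to fail. A minimal sketch, assuming a hypothetical map from each test to the source files it exercised on its last full run (the names and data format are illustrative):

```python
def select_tests(changed_files, coverage_map, always_run=()):
    """Pick the tests whose covered files overlap the change.

    coverage_map: test name -> set of source files that test exercised on
    its last full run (hypothetical format; a coverage tool could produce it).
    always_run: tests to include no matter what, e.g. a smoke test.
    """
    changed = set(changed_files)
    known = set().union(*coverage_map.values())
    if changed - known:
        # Something changed that no test is known to touch (build config,
        # a brand-new file). We can't reason about it, so run everything.
        return sorted(set(coverage_map) | set(always_run))
    selected = {test for test, files in coverage_map.items() if files & changed}
    return sorted(selected | set(always_run))


if __name__ == "__main__":
    coverage_map = {
        "test_login": {"auth.py", "db.py"},
        "test_cart": {"cart.py", "db.py"},
        "test_search": {"search.py"},
    }
    print(select_tests(["auth.py"], coverage_map))     # only test_login
    print(select_tests(["db.py"], coverage_map))       # test_cart and test_login
    print(select_tests(["Dockerfile"], coverage_map))  # unknown file: full suite
```

The fallback matters: when a change touches something the map doesn’t know about, running the full suite is the safe call.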

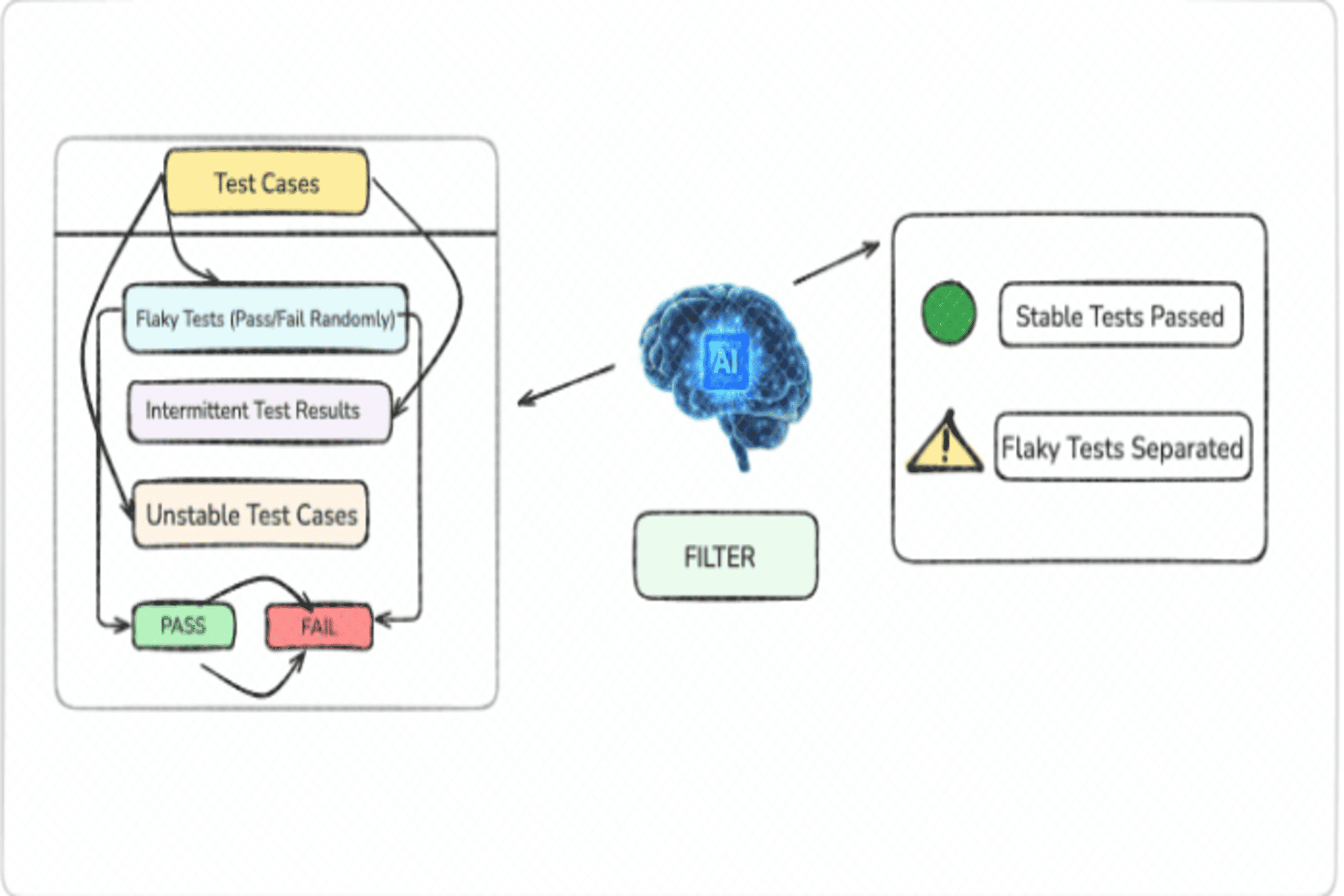

- Catch flaky tests (the real enemy)

You rerun a test three times—pass, fail, pass. Classic flake. AI can see those patterns and say, “This test is dodgy”. Then you either fix it or shove it aside instead of rerunning all night.
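
Catching “pass, fail, pass” doesn’t need a model to get started. Here’s a minimal heuristic sketch (not how Meta or any other company actually does it): if a test both passed and failed on the same commit, the code didn’t change, so the test is flaky. The run-record format, the function names, and the 0.2 quarantine threshold are assumptions.

```python
from collections import defaultdict


def flakiness_scores(runs):
    """Share of commits on which each test both passed and failed.

    runs: iterable of (test_name, commit_sha, passed) records from CI history.
    Same commit means same code, so a mixed result points at the test
    (or its environment), not at the change.
    """
    outcomes = defaultdict(set)
    for test, commit, passed in runs:
        outcomes[(test, commit)].add(bool(passed))
    commits_seen = defaultdict(int)
    commits_flipped = defaultdict(int)
    for (test, _commit), seen in outcomes.items():
        commits_seen[test] += 1
        if len(seen) == 2:  # saw both True and False on this commit
            commits_flipped[test] += 1
    return {test: commits_flipped[test] / commits_seen[test] for test in commits_seen}


def quarantine_candidates(scores, threshold=0.2):
    """Tests flaky often enough to pull out of the blocking suite for a fix."""
    return sorted(test for test, score in scores.items() if score >= threshold)


if __name__ == "__main__":
    runs = [
        ("test_checkout", "a1", True),
        ("test_checkout", "a1", False),
        ("test_checkout", "a1", True),
        ("test_checkout", "b2", True),
        ("test_payment", "a1", True),
        ("test_payment", "b2", False),  # failed on a new commit: likely a real regression
    ]
    scores = flakiness_scores(runs)
    print(scores)
    print(quarantine_candidates(scores))
```

Note that `test_payment` isn’t flagged: it failed on a different commit, which is exactly the failure you want to keep seeing.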

- Use infra smartly

Got five heavy builds in the queue? Spin up more agents. Quiet day? Scale down. AI handles that dance so you don’t burn cash or wait forever.
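
Here’s a rough sketch of that scaling decision in Python. A real system might use a learned demand forecast; this one swaps in a simple exponentially weighted moving average of recent queue depths as a stand-in. The agent capacity and min/max limits are made-up parameters.

```python
import math


def ewma(values, alpha=0.5):
    """Exponentially weighted moving average: recent samples count more."""
    if not values:
        return 0.0
    avg = float(values[0])
    for v in values[1:]:
        avg = alpha * v + (1 - alpha) * avg
    return avg


def desired_agents(recent_queue_depths, running_jobs, jobs_per_agent=2,
                   min_agents=1, max_agents=10):
    """How many build agents to run right now.

    Demand is the running jobs plus the larger of the current queue and the
    smoothed recent queue, so a sudden spike scales up immediately while
    a sudden lull scales down gradually.
    """
    current = recent_queue_depths[-1] if recent_queue_depths else 0
    demand = running_jobs + max(current, ewma(recent_queue_depths))
    needed = math.ceil(demand / jobs_per_agent)
    return max(min_agents, min(max_agents, needed))


if __name__ == "__main__":
    print(desired_agents([0, 0, 0], running_jobs=0))  # quiet day: floor of 1
    print(desired_agents([2, 4, 8], running_jobs=2))  # queue spiking: 5
    print(desired_agents([40, 60], running_jobs=10))  # capped at 10
```

Taking the larger of the current queue and the smoothed one is a deliberate asymmetry: scale up fast when builds pile up, scale down slowly so a brief lull doesn’t kill agents you’ll need again in five minutes.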

- Gut check before deploy

Deployments feel like rolling dice sometimes. AI can scan logs, metrics, and error rates and give you a heads-up: safe to go, or maybe hold off. Like a safety net before hitting the big red button.
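
One common building block for that gut check is comparing a small canary rollout against the current baseline. The sketch below is a plain two-proportion z-test on error rates, not any vendor’s actual canary analysis. The z threshold and minimum sample size are assumptions you’d tune for your own traffic.

```python
import math


def canary_verdict(base_errors, base_requests, canary_errors, canary_requests,
                   z_threshold=2.0, min_requests=500):
    """Compare canary vs. baseline error rates with a two-proportion z-test.

    Returns "wait" until the canary has seen enough traffic, then "rollback"
    if its error rate is significantly higher than the baseline's, else "promote".
    """
    if canary_requests < min_requests:
        return "wait"
    p_base = base_errors / base_requests
    p_canary = canary_errors / canary_requests
    pooled = (base_errors + canary_errors) / (base_requests + canary_requests)
    se = math.sqrt(pooled * (1 - pooled) * (1 / base_requests + 1 / canary_requests))
    if se == 0:
        # Pooled rate is 0 or 1, so both sides have the same error rate.
        return "promote"
    z = (p_canary - p_base) / se
    return "rollback" if z > z_threshold else "promote"


if __name__ == "__main__":
    # Baseline: 0.5% errors over 10,000 requests.
    print(canary_verdict(50, 10_000, 5, 1_000))   # canary at 0.5%
    print(canary_verdict(50, 10_000, 30, 1_000))  # canary at 3%
    print(canary_verdict(50, 10_000, 3, 100))     # too early to tell
```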

Real Stuff Happening Already

This isn’t just slide-deck theory; companies are already messing with it:

- Meta (Facebook) uses AI to sniff out flaky tests so they don’t keep wasting machines rerunning junk.

- Microsoft built test impact analysis into Azure DevOps, so it can tell you which tests are worth running after a code change.

- Google does this canary thing where AI watches a small rollout and decides if it’s safe to push everywhere. Pretty slick.

- And there are over a dozen startups screaming “AI copilots for DevOps” that hook straight into Jenkins or GitLab. Some of it works; some of it is hype.

What Teams Actually Notice with AI in CI/CD

- Faster feedback—honestly, you don’t wait forever anymore; only the tests that actually matter get triggered, so the pipeline feels lighter.

- Costs drop—no more paying for build agents just sitting idle; AI spins them up when needed and kills them when not.

- Quality’s better—fewer of those “works on my machine” flaky tests, and when a build fails, you get hints that actually make sense.

- Releases feel safer—before you push live, AI kind of runs a “gut check” on logs and metrics so you’re not gambling every deployment.

Benefits for Teams

In short, AI in CI/CD Pipelines doesn’t just automate steps; it optimizes the entire flow.

Challenges and Limitations

Where AI Trips Up in CI/CD

Not gonna lie, it’s not magic. Like bug triage, it gets messy:

- Needs past data → if the system’s fresh, AI has nothing to learn from, so don’t expect it to help on day one.

- False calls → sometimes it screams “this build will fail,” and it doesn’t. After a while, devs roll their eyes.

- Hook-up pain → wiring AI into old pipelines is never clean. Always some config hell.

- Still need people → AI can say “skip this test,” but a human has to sign off. Otherwise, it’s risky.
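
One way to keep false calls from eroding trust is to measure them. A minimal sketch, assuming you log each prediction next to what actually happened: compute precision (how often a “will fail” warning was right) and recall (how many real failures got a warning), and only surface warnings once they’ve earned it. The function names, thresholds, and sample log are hypothetical.

```python
def precision_recall(predictions):
    """predictions: list of (predicted_fail, actually_failed) booleans."""
    tp = sum(1 for pred, actual in predictions if pred and actual)
    fp = sum(1 for pred, actual in predictions if pred and not actual)
    fn = sum(1 for pred, actual in predictions if not pred and actual)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def should_show_warnings(predictions, min_precision=0.7, min_flags=20):
    """Only surface "this build will fail" warnings once they've earned trust.

    Needs at least min_flags past warnings to judge, and at least
    min_precision of them must have been right.
    """
    flags = sum(1 for pred, _ in predictions if pred)
    if flags < min_flags:
        return False
    precision, _ = precision_recall(predictions)
    return precision >= min_precision


if __name__ == "__main__":
    # Made-up log: 10 warnings (6 right, 4 false alarms), 2 missed failures.
    log = ([(True, True)] * 6 + [(True, False)] * 4
           + [(False, True)] * 2 + [(False, False)] * 8)
    print(precision_recall(log))
    print(should_show_warnings(log, min_flags=10))  # 0.6 < 0.7, so False
```

Keeping the warnings advisory until precision clears the bar is one way to keep the human sign-off step meaningful instead of a rubber stamp.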

So yeah, it takes time. Feels rough at the start, but if you stick with it, the benefits are real.

Future Outlook: AI-Driven CI/CD

What Pipelines Might Look Like Soon

Honestly, it feels like they’ll just run themselves one day.

- Fixing their own mess → flaky test fails? AI just retries or tweaks it. You don’t even notice.

- Tests written for you → AI sees what changed in the code and spits out a couple of missing test cases. Not perfect, but better than nothing.

- Rollback on autopilot → if a deploy goes sideways, AI yanks it back before anyone’s even on Slack screaming.

- Talking to it → instead of writing scripts, you just say “rerun only DB tests”, and it does it. Like chatting with a bot.

We’re kind of moving from continuous delivery to something else, maybe “continuous intelligence,” if you want to call it that. The shift toward AI-Driven DevOps Automation will likely speed that along.

Practical Steps to Get Started

Thinking About Trying AI in CI/CD?

If your team wants to try this, don’t go all in on day one. Just start small:

- First, figure out what’s actually bugging you. Is it flaky tests, slow builds, or random failures? Pick one.

- Don’t reinvent the wheel; some stuff’s already out there (Azure DevOps, Harness, and Launchable have AI bits baked in).

- The easiest entry is monitoring; let AI flag weird patterns in logs/builds before you let it touch actual automation.

- Once you’re comfy, play with test selection. Tools like Launchable already do “run the tests that matter” pretty well.

- And yeah, track the numbers: build times, failure rates, how often you rerun tests. If they go down, you’ll know it’s worth it.
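
For the monitoring and tracking steps, you don’t need a model on day one. A minimal sketch, assuming you can pull a list of recent build durations in seconds: flag unusually slow builds with a robust z-score (median and median absolute deviation), and compare median build time before and after a change. The function names are hypothetical; the 0.6745 scaling constant and the 3.5 cutoff are the usual rule of thumb for this score, but you’d tune the cutoff to your own builds.

```python
import statistics


def flag_slow_builds(durations, threshold=3.5):
    """Indices of builds that took unusually long.

    Uses a robust z-score (median and median absolute deviation, MAD), so a
    few very slow builds don't drag the baseline up with them.
    """
    med = statistics.median(durations)
    mad = statistics.median([abs(d - med) for d in durations])
    if mad == 0:
        # Most builds take exactly the same time; anything slower stands out.
        return [i for i, d in enumerate(durations) if d > med]
    return [i for i, d in enumerate(durations)
            if 0.6745 * (d - med) / mad > threshold]


def median_change_pct(before, after):
    """Percent change in median build time; negative means faster."""
    base = statistics.median(before)
    return 100.0 * (statistics.median(after) - base) / base


if __name__ == "__main__":
    durations = [300, 310, 295, 305, 298, 302, 900]  # seconds, made up
    print(flag_slow_builds(durations))  # [6]: the 900 s build
    print(median_change_pct([600, 620, 580], [450, 470, 430]))  # -25.0
```

Medians are used instead of means so a single 15-minute outlier doesn’t hide behind its own effect on the average.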

Over time, the helper piece turns into something you actually rely on.

Conclusion

CI/CD pipelines did the easy bit: automation. But the real pain points? Still there. Flaky tests chewing up time, builds running when they don’t need to, and deploys that feel like rolling dice.

This is where AI in CI/CD Pipelines and AI-Driven DevOps Automation are starting to patch the holes. Together, they can predict when a build’s likely to blow up, run only the tests that matter, spin up infra when you need it, and throw a red flag if a release looks risky. Basically, they make the pipeline less dumb.

End result? Faster shipping, fewer “not again” moments, and systems that don’t fall over as easily.

And yeah, give it a bit of time and we probably won’t even say CI/CD anymore. It’ll just be AI-driven delivery pipelines that learn, adapt, and fix themselves.

Discover how Payoda can help transform your CI/CD workflows with AI.

FAQs

- What can AI bring to my CI/CD pipeline that my current automation can’t?

Your current CI/CD setup works like a really efficient assembly line: it’s great at following orders, but it only does what it’s told. AI brings some judgment to the whole operation. It can:

- Spot problems before they show up. By looking at your past builds and tests, AI can pick up on subtle patterns that hint a new bit of code might cause issues, giving you a heads-up before you’ve even run the tests.

- Make your pipeline smarter. Instead of running every single test for every little code change, AI can figure out which tests actually matter. That can cut build times sharply and get feedback to your team much faster.

- Catch harder issues. AI can dig beyond basic checks to surface complex security flaws or performance problems that a simple script might miss.

Basically, AI turns your pipeline from a fixed tool into something that keeps improving, learning from every run.

- Is there a risk that AI will replace all DevOps engineers?

That’s not going to happen. AI isn’t here to push DevOps engineers out; it’s here to be your strongest support, your ultimate teammate. AI can take on the boring, repetitive, data-heavy tasks, freeing DevOps engineers to focus on other pressing issues or tackle the next big problem. For instance, AI can:

- Help track down the root cause of a bug quickly. Instead of you spending hours digging through thousands of lines of logs, AI can do much of that detective work for you.

- Manage your cloud resources efficiently, scaling them up or down automatically based on what’s needed at the moment.

By doing the heavy lifting, AI lets you concentrate on the best parts of your job, like designing better systems and pushing innovation forward. The best DevOps teams down the road will be the ones who know how to work with AI, not against it.

- What's the hardest part about adding AI to my pipeline?

While the upside is huge, getting started can be tricky. The main hurdles you’ll probably hit are:

- The data mess. AI models are only as good as the data you give them. If your old pipeline data is scattered or missing pieces, the AI won’t learn much. It’s like trying to teach someone from a textbook full of typos.

- The knowledge gap. You don’t just need DevOps skills; you also need a handle on data science and machine learning. That’s a new combination for many teams, so you might need to bring in new people or find tools that simplify the process.

- The “black box” problem. Sometimes it’s hard to understand why an AI made a certain call. If a model says some code is high-risk, you need to be able to trust that judgment. Make sure your AI is transparent about what it’s doing, and consider any biases it might have picked up from old data.
